Robot teaching in the VR environment

We study a "VR robot teaching system" that uses virtual reality technology to present the robot's environment to a human operator, infers the operator's intention from their actions in the virtual environment, and uses that intention to teach tasks to a real robot at a remote site.

Research on a robot teaching system using virtual reality

The purpose of this study is to teach humanoid robots in remote locations such as outer space.

Conventional teaching methods, namely robot programming and direct teaching with playback, are difficult to apply to humanoid robots, which require position and force information at many points simultaneously. Master-slave teaching is also undesirable for the operator: when the slave is at a remote location, the communication time delay makes control difficult, and because failure is not permitted, the operator is forced to work under constant tension. Our laboratory has therefore constructed a system that generates robot teaching commands from the operation intention inferred from a series of human actions performed in a VR environment.

[[The conceptual scheme of the system is here]]

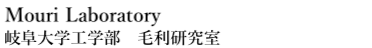

In this system, the actual working space in which the robot is placed is reconstructed on a computer as a VR space.

The teacher demonstrates the task to be taught to the robot in this virtual working space (the virtual work simulation).

Force information is presented to the operator through the Force Feedback Glove (FFG), and visual information is presented through a head-mounted display (HMD) and a personal computer screen.

The operator's motions are measured by a three-dimensional position sensor, a data glove, and other devices, and are reflected in the VR simulation.

After the work is finished, the computer analyzes the recorded work data, extracts the data needed for teaching, and generates the robot teaching commands.
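The extraction step can be illustrated with a minimal sketch. It assumes, purely for illustration, that the recorded work data is a list of time-stamped hand positions with a grasp flag derived from the data glove, and that teaching commands are generated wherever the grasp state changes. The names `Sample`, `Command`, `extract_commands`, and the command names `MOVE_TO`, `GRASP`, and `RELEASE` are hypothetical and do not come from the actual system.

```python
from dataclasses import dataclass
from typing import List, Tuple

Position = Tuple[float, float, float]


@dataclass
class Sample:
    """One recorded sample of the demonstration (hypothetical format)."""
    t: float             # time stamp in seconds
    hand_pos: Position   # hand position from the 3D position sensor
    grasping: bool       # True while the data glove reports a closed hand


@dataclass
class Command:
    """One robot teaching command (hypothetical command set)."""
    name: str            # "MOVE_TO", "GRASP", or "RELEASE"
    target: Position


def extract_commands(samples: List[Sample]) -> List[Command]:
    """Keep only the samples where the grasp state changes.

    Each change produces a MOVE_TO to the hand position at that moment,
    followed by a GRASP or RELEASE at the same position.
    """
    commands: List[Command] = []
    previous = False  # assume the hand starts open
    for s in samples:
        if s.grasping != previous:
            commands.append(Command("MOVE_TO", s.hand_pos))
            commands.append(Command("GRASP" if s.grasping else "RELEASE", s.hand_pos))
            previous = s.grasping
    return commands


# A short pick-and-place demonstration (made-up values).
demo = [
    Sample(0.0, (0.0, 0.0, 0.3), False),
    Sample(1.0, (0.2, 0.0, 0.1), False),
    Sample(1.5, (0.2, 0.0, 0.1), True),
    Sample(3.0, (0.5, 0.1, 0.1), True),
    Sample(3.5, (0.5, 0.1, 0.1), False),
]
program = extract_commands(demo)
for c in program:
    print(c.name, c.target)
```

Only the moments that carry the operator's intention (where to grasp and where to release) survive. The intermediate hand trajectory is discarded, which is one simple way of reading "extracts the data needed for teaching".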

After their effectiveness is confirmed in a VR robot simulation, the robot teaching commands are sent to the actual robot system.

Research on robot vision and automatic calibration

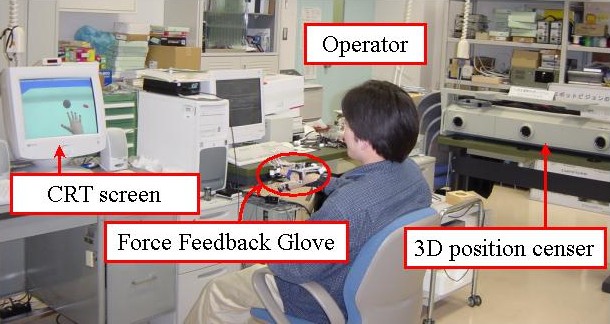

At present, robots used in industry are programmed mainly for restricted work environments. For this reason, autonomous robots that judge the situation by themselves and adapt to the environment are being studied. For an autonomous robot, a visual function for obtaining information about the outside world is important, and lasers, ultrasonic waves, cameras, and similar sensors are used in vision systems. However, lasers and ultrasonic waves are easily affected by the interior and surface of objects, so they are used only in special cases, and cameras are the most widely used sensors for vision systems. In this study, we therefore calibrate a CCD camera and use it to measure the positions of points on an object seen in the image. Once this position measurement is established, geometric modeling (that is, object recognition) becomes possible through image processing using the measured points, and autonomous operation of the robot should also become possible. The vision head used in this study is described below.
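As a rough illustration of how calibration makes position measurement possible, the sketch below recovers the 3D position of a point from its pixel coordinates in two images. It assumes a rectified pair of identical pinhole cameras, which the text does not specify. The intrinsic parameters (focal length `f` in pixels, principal point `cx`, `cy`) and the baseline are the kind of values a calibration would provide, and the numbers used here are made up.

```python
from typing import Tuple


def triangulate(u_left: float, u_right: float, v: float,
                f: float, cx: float, cy: float,
                baseline: float) -> Tuple[float, float, float]:
    """Return (X, Y, Z) in the left camera frame, in the units of `baseline`.

    Pinhole model for a rectified pair: a point at depth Z appears with
    disparity d = u_left - u_right = f * baseline / Z.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f * baseline / disparity
    x = (u_left - cx) * z / f
    y = (v - cy) * z / f
    return (x, y, z)


# Hypothetical calibration result: f = 800 px, principal point (320, 240),
# baseline 0.06 m between the two cameras.
point = triangulate(u_left=400.0, u_right=352.0, v=240.0,
                    f=800.0, cx=320.0, cy=240.0, baseline=0.06)
print(point)
```

Errors in `f`, `cx`, `cy`, or the baseline carry straight through to the measured position, which is why calibration comes before measurement.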

For a robot to do work equivalent to a human's in dangerous environments such as outer space and disaster sites, it must be given sensory functions such as vision, hearing, and touch so that it can acquire environmental information and make judgments. Vision in particular is regarded as the most important sense for recognizing the surrounding environment. For remote operation, we built a prototype vision head that imitates the human head mechanism, so that it can adequately follow the movements of the human operator and exceed the dynamic characteristics of the human eyes and neck. The vision head has 6 degrees of freedom in total, corresponding to elevation and pan of the neck and elevation and pan of each of the two eyes, and each axis rotates independently. Because it imitates a human, its size and the positional relationship of its rotation axes are approximately the same as a human's, and its total weight is about 670 g including the CCD camera.

[[The mechanism of the robot is here.]]

Research on the Force Feedback Glove

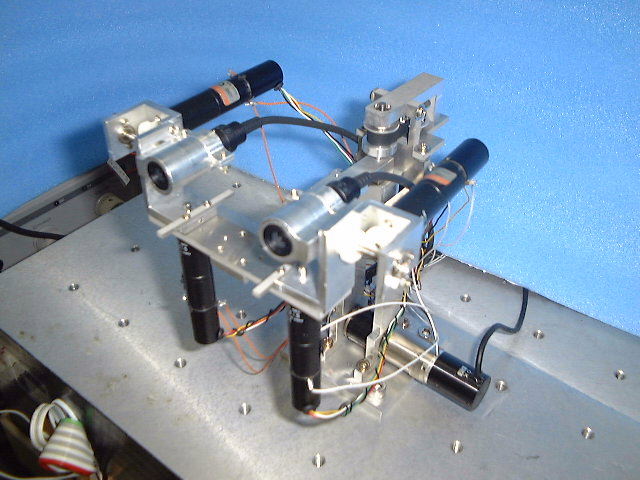

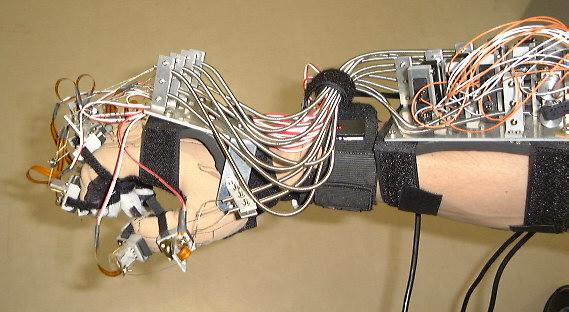

The Force Feedback Glove (FFG) is a glove worn by the human operator for use in the robot teaching system based on virtual reality. When grasping an object in a computer-generated virtual reality (VR) environment, the operator moves their hand while watching the image displayed by computer graphics. However, no matter how many objects are grasped in the VR environment, the operator gets no tactile sensation of having grasped them. We are therefore developing the FFG to feed the sensation of grasping an object back to the operator.

The FFG constructed in our laboratory has 10 electric motors and 10 force sensors, which generate or sense force at two points on each finger: the tip and the base. All the motors control the forces that are fed back to the operator through wires.
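The pairing of one motor with one force sensor at each of the 10 points suggests a per-channel force control loop. The sketch below is only an illustration of that idea, assuming a simple proportional controller with a clamped output. The control law, the `gain` and `limit` values, and all names are assumptions and are not taken from the actual FFG.

```python
from typing import Dict, Tuple

Channel = Tuple[str, str]  # (finger, point)

FINGERS = ("thumb", "index", "middle", "ring", "little")
POINTS = ("tip", "base")
# 5 fingers x 2 points = 10 channels, one motor and one force sensor each.
CHANNELS = [(finger, point) for finger in FINGERS for point in POINTS]


def motor_commands(target: Dict[Channel, float],
                   measured: Dict[Channel, float],
                   gain: float = 0.5,
                   limit: float = 1.0) -> Dict[Channel, float]:
    """Compute one motor command per channel with proportional control.

    `target` holds the forces the VR simulation wants the operator to feel,
    `measured` holds the force sensor readings. Missing channels count as 0.
    The output is clamped to [-limit, limit] (hypothetical normalized units).
    """
    commands: Dict[Channel, float] = {}
    for channel in CHANNELS:
        error = target.get(channel, 0.0) - measured.get(channel, 0.0)
        commands[channel] = max(-limit, min(limit, gain * error))
    return commands


# The simulation reports contact at the index fingertip only (made-up values).
cmds = motor_commands(
    target={("index", "tip"): 2.0},
    measured={("index", "tip"): 1.0},
)
print(cmds[("index", "tip")])
```

In a real device this computation would run repeatedly at a fixed rate, with the target forces supplied by the VR simulation whenever a virtual finger touches a virtual object.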

In actual operation, a Cyber Glove, which detects the angles of the finger joints, is used together with the FFG.

[[The mechanism of FFG is here.]]